Timing is Everything (P2): Navigating Network-Based Strategies in a Shifting Geopolitical Landscape

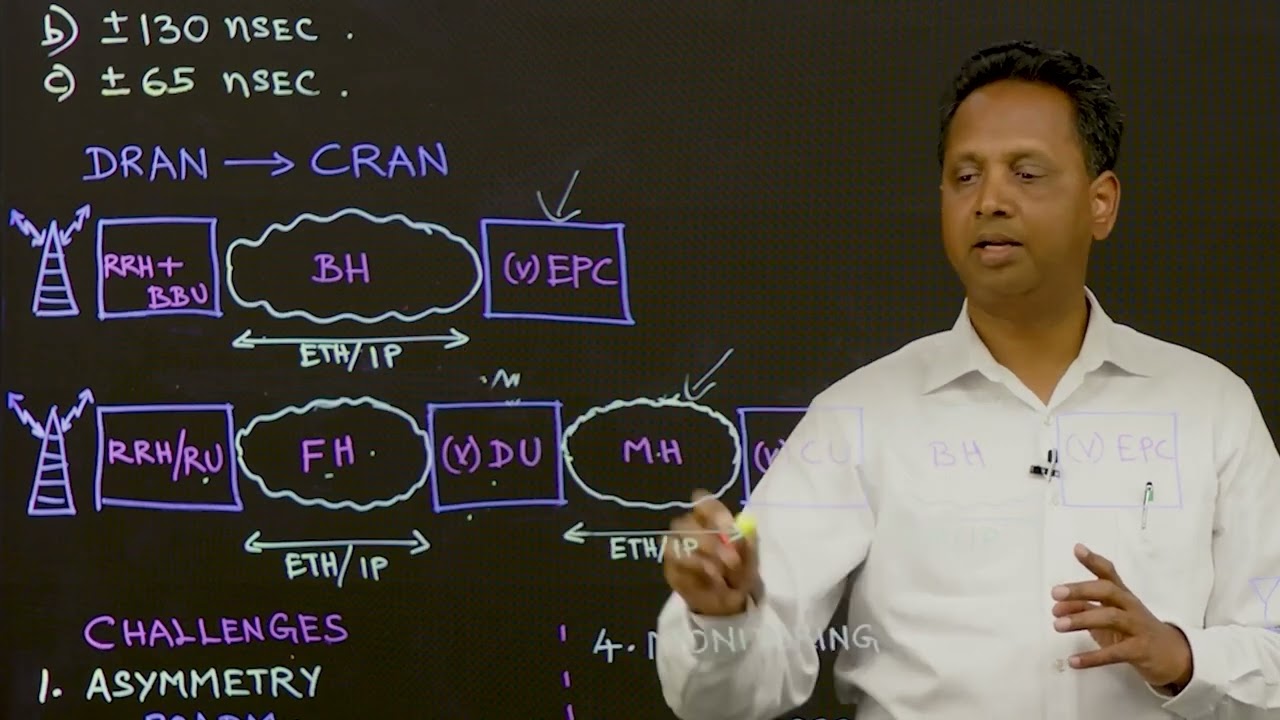

In this video, you’ll discover the critical role of synchronization in 4G and 5G networks. It explores the evolution from Distributed RAN (DRAN) to Centralized RAN (CRAN) and its implications for timing requirements. Learn about the challenges in achieving accurate timing and synchronization in modern networks, along with expert technical recommendations to overcome them.

You’ll learn

The precision timing needs for macro base stations and carrier aggregation in 4G and 5G networks

How synchronization strategies adapt across fronthaul, midhaul, and backhaul segments

About real-world synchronization challenges like asymmetry, GNSS outages, and multivendor environments

Transcript

0:00 Hello and welcome back, everyone. I'm Rafi Pur. Today's session is designed as an interactive whiteboard session: we're going to talk about timing and synchronization in 4G and 5G networks. I'm joined by my colleague Kamachigo Palakrishna. Hey Cam, thanks a lot for joining today's session. Can you give us a brief overview of what you're going to talk about?

0:20 Yeah, thanks for having me here, Rafi. Today we are going to talk about the synchronization requirements in 4G and 5G networks. Then we will talk about the DRAN to CRAN evolution and the synchronization requirements associated with it. Then we'll talk about the challenges in the synchronization network and how we mitigate them using the SPM and PPM features. Finally, we'll talk about the different recommendation models.

0:48 Thanks a lot, Cam. That sounds very interesting. Let's dive into it.

0:58 When it comes to synchronization requirements for 4G and 5G, the first thing we talk about is macro base stations. Macro base stations need ±1.5 microseconds. Here we are talking about the air interface: the air interfaces of the base stations need to be aligned to within ±1.5 microseconds of a common time reference. In practice, if the path from the grandmaster to each base station is aligned within ±1.5 microseconds, you achieve the required precision between the base stations. So the first requirement is ±1.5 microseconds for the macro base station.
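A short bound (our illustration, not part of the session) makes the relationship explicit. Writing $x_A$ and $x_B$ for the air-interface time of base stations A and B and $T$ for the grandmaster's time, the triangle inequality shows that a ±1.5 µs limit per base station keeps any pair within 3 µs of each other:

$$
|x_A - x_B| \le |x_A - T| + |T - x_B| \le 1.5\ \mu\text{s} + 1.5\ \mu\text{s} = 3\ \mu\text{s}
$$

The 3 µs pairwise figure is the cell phase alignment commonly quoted for TDD; the ±1.5 µs per-node limit is the form in which it is usually budgeted across the network.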

1:43 Then there is carrier aggregation. Carrier aggregation is a technology used by operators to increase the download or upload speed for one particular subscriber. Normally a subscriber connects to one base station, but with carrier aggregation the subscriber connects to more than one base station simultaneously to increase its data throughput. For carrier aggregation there are two requirements, for FR1 and FR2, and both specify how precise the alignment has to be at the radio interface level: ±130 nanoseconds for FR1 and ±65 nanoseconds for FR2. Now, if you look at it, the ±130 or ±65 nanoseconds need not hold all the way from the grandmaster to the base station. It is a requirement between the two radio units connected to a common boundary clock. In other words, for the radio interfaces of the two co-located base stations where you want to achieve carrier aggregation, the requirement only has to be met relative to their closest common boundary clock or time source, and that is good enough.
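The point about the common boundary clock can be sketched numerically. The sketch assumes the ±130 ns (FR1) and ±65 ns (FR2) figures apply to each radio's time error measured relative to the shared boundary clock; the function names and all numbers are hypothetical. Error accumulated upstream of that clock is common to both radios and cancels when their outputs are compared.

```python
# Hypothetical helpers illustrating why only the error below the closest
# common boundary clock matters for carrier aggregation.

def radio_te(upstream_ns: float, branch_ns: float) -> float:
    """Absolute time error of one radio: error accumulated from the T-GM down
    to the common boundary clock plus the error added on its own branch."""
    return upstream_ns + branch_ns

def relative_te(upstream_ns: float, branch_a_ns: float, branch_b_ns: float) -> float:
    """Time error between two radios fed by the same boundary clock.
    The shared upstream term cancels, leaving only the branch difference."""
    return radio_te(upstream_ns, branch_a_ns) - radio_te(upstream_ns, branch_b_ns)

def meets_ca_limit(branch_errors_ns: list[float], limit_ns: float) -> bool:
    """Assumed check: every radio stays within ±limit_ns of the common clock."""
    return all(abs(e) <= limit_ns for e in branch_errors_ns)

# Illustrative values: 900 ns accumulated upstream (far above either CA limit),
# +90 ns and -30 ns added on the two branches.
upstream, branches = 900.0, [90.0, -30.0]
print(relative_te(upstream, *branches))   # 120.0
print(meets_ca_limit(branches, 130.0))    # True  (FR1)
print(meets_ca_limit(branches, 65.0))     # False (FR2)
```

The 900 ns upstream error would matter for the ±1.5 µs requirement, but it drops out of the carrier aggregation comparison entirely.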

3:09 The DRAN to CRAN evolution is a very different ball game, especially when it comes to synchronization. If you look at the DRAN, the distributed RAN architecture, you have the BBU and RRH together, meaning the radio head and the BBU unit, then the backhaul, then the EPC. Typically the backhaul used to be Ethernet/IP transport, and synchronization could predominantly be sourced directly at the base station itself, with a GNSS receiver or some module, or you could bring in synchronization from the network and drive it from there. CRAN, which is a cloud RAN architecture, splits the BBU and RRH into sub-splits or divisions: the BBU is further split into a DU, the distributed unit, and a CU, the centralized unit, and everything else shifts accordingly. The backhaul now sits between the CU and the EPC, and the former BBU connection is split into midhaul and fronthaul. In a CRAN architecture, the link between the RU and the DU is called fronthaul, the link between the DU and the CU is called midhaul, and the link from the CU to the EPC is the original one, the backhaul.
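The segment naming above can be captured in a small lookup table. This is only a sketch of the terminology used in the session: the chain and table names are hypothetical, and the node labels follow the speaker's description.

```python
# Node chains as described in the session, ordered from the radio toward the core.
DRAN_CHAIN = ["BBU+RRH", "EPC"]
CRAN_CHAIN = ["RU", "DU", "CU", "EPC"]

# Transport segment carried by each adjacent pair of nodes.
LINKS = {
    ("BBU+RRH", "EPC"): "backhaul",  # DRAN: the only transport segment
    ("RU", "DU"): "fronthaul",       # CRAN split
    ("DU", "CU"): "midhaul",
    ("CU", "EPC"): "backhaul",
}

def segment_of(a: str, b: str) -> str:
    """Name of the transport segment between two adjacent nodes (order-insensitive)."""
    if (a, b) in LINKS:
        return LINKS[(a, b)]
    return LINKS[(b, a)]  # raises KeyError if the nodes are not adjacent

def segments(chain: list[str]) -> list[str]:
    """Transport segments crossed when walking along a chain of nodes."""
    return [segment_of(a, b) for a, b in zip(chain, chain[1:])]

print(segments(DRAN_CHAIN))  # ['backhaul']
print(segments(CRAN_CHAIN))  # ['fronthaul', 'midhaul', 'backhaul']
```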

4:43 When it comes to synchronization in the split CRAN model, predominantly everything is now Ethernet transport. That means you can start synchronization in the fronthaul itself, in the midhaul, or in the backhaul. It purely depends on your synchronization budget and how many hops of transport network elements sit between your time source and the radio base station. It depends on several factors: the number of hops, the budget you want to achieve, the asymmetry, and others. Overall, CRAN really changed the dynamics of adopting 1588-based synchronization in the network, mainly the ITU-T G.8275.1 profile.
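How the number of hops and the asymmetry eat into the budget can be sketched with a simplified worst-case sum. The per-class constant time error (cTE) values are the ones commonly quoted for ITU-T G.8273.2 boundary clocks (class A 50 ns, class B 20 ns, class C 10 ns). Everything else, including the hypothetical `max_hops` helper, the linear summation, and the reserved allowance for the grandmaster and end device, is an assumption for illustration, and dynamic time error is ignored.

```python
# Simplified, worst-case time error budget per boundary-clock hop.
# cTE values: commonly quoted ITU-T G.8273.2 class limits (ns).
CTE_NS = {"A": 50.0, "B": 20.0, "C": 10.0}

def max_hops(budget_ns: float, bc_class: str,
             link_asym_ns: float = 0.0, reserved_ns: float = 0.0) -> int:
    """Hypothetical helper: largest number of hops whose accumulated error fits.

    Each hop is assumed to add its class cTE plus half of any uncompensated
    asymmetry on its link (the PTP offset error is asymmetry / 2).
    reserved_ns is an assumed allowance for the T-GM and the end device.
    """
    per_hop_ns = CTE_NS[bc_class] + link_asym_ns / 2
    available_ns = budget_ns - reserved_ns
    if available_ns <= 0:
        return 0
    return int(available_ns // per_hop_ns)

# Assumed 1.5 us end-to-end budget with 500 ns reserved for the T-GM and radio.
print(max_hops(1500, "C", reserved_ns=500))                   # 100
print(max_hops(1500, "C", link_asym_ns=40, reserved_ns=500))  # 33
print(max_hops(1500, "B", reserved_ns=500))                   # 50
```

In this toy model, 40 ns of uncompensated asymmetry per link cuts the hop count by about two thirds, which is why asymmetry has to be measured and compensated.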

5:42 Now, the challenges for a 5G network, or even a 4G network, when it comes to synchronization. Number one is asymmetry. There are three types of asymmetry we can talk about: one is introduced by the ROADM, another by the transponder, and the third is fiber asymmetry. ROADMs and transponders are typically used in long-haul networks, whereas fiber asymmetry can be introduced even in a pure Ethernet network. Any time there is asymmetry, half of the asymmetry translates into a time error offset on the slave side. That means asymmetry must be measured and compensated; otherwise, asymmetry alone will introduce a large time error when the slave device recovers time.

6:41 basically which needs extend hold over

6:44 so anytime GNSS goes down because the

6:46 source of the time which is grandmaster

6:48 clock what we are talking about if it's

6:50 GNSS goes down then basically it's going

6:54 to continue to drift from its really

6:56 like you know this stable clock but then

6:59 to mitigate that you need extended

7:01 holdover support and which is typically

7:03 done using the a CGM or a statamon clock

7:07 backing up the grandmaster when the GNSS

7:10 goes down the third thing is the network
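A common first-order model for how time error grows during holdover is $TE(t) = TE_0 + y_0 t + \tfrac{1}{2} D t^2$, where $y_0$ is the fractional frequency offset when GNSS is lost and $D$ is the oscillator's drift (aging) rate. The sketch below solves it for the time until a given budget is exceeded; the function names, the budget, and the oscillator figures are illustrative assumptions, not values from the session.

```python
import math

def holdover_te(t_s: float, te0_s: float = 0.0,
                freq_offset: float = 0.0, drift_per_s: float = 0.0) -> float:
    """First-order holdover model: TE(t) = TE0 + y0*t + 0.5*D*t^2 (seconds)."""
    return te0_s + freq_offset * t_s + 0.5 * drift_per_s * t_s ** 2

def time_to_exceed(budget_s: float, te0_s: float = 0.0,
                   freq_offset: float = 0.0, drift_per_s: float = 0.0) -> float:
    """Seconds of holdover before TE(t) reaches budget_s.

    Assumes te0_s < budget_s and non-negative, not-both-zero freq_offset and
    drift_per_s, so TE grows monotonically toward the budget.
    """
    a, b, c = 0.5 * drift_per_s, freq_offset, te0_s - budget_s
    if a == 0:
        return -c / b                                      # linear growth only
    return (-b + math.sqrt(b * b - 4 * a * c)) / (2 * a)   # positive root

# Illustrative oscillators against an assumed 1 us holdover budget:
print(time_to_exceed(1e-6, freq_offset=1e-11))   # ~100000 s (~27.8 h), offset only
print(time_to_exceed(1e-6, drift_per_s=2e-15))   # ~31623 s (~8.8 h), drift only
```

The quadratic term is why drift dominates long outages: doubling the holdover time quadruples its contribution.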

7:13 The third challenge is network convergence. When we talk about network convergence, it's not just the very first time the network converges from the grandmaster all the way to the base station; it's any time the network switches. Say a boundary clock switches from one upstream node to another, or the RU switches from one upstream boundary clock to another. Those transitions are also very critical: how much phase error they introduce during the transition, and how quickly the switch from one upstream master to another completes. That's what we mean by network convergence.
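The two quantities singled out for a switchover, the phase error introduced and how quickly the node settles, can be pulled out of a time error trace. This is a hypothetical analysis sketch: the function, the sample format, and the settling threshold are assumptions, not a feature described in the session.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SwitchoverReport:
    peak_te_ns: float               # largest |time error| at or after the switch
    settle_time_s: Optional[float]  # delay until |TE| stays within threshold, or None

def analyze_switchover(samples: list[tuple[float, float]],
                       switch_time_s: float,
                       threshold_ns: float) -> SwitchoverReport:
    """samples: (time_s, time_error_ns) pairs sorted by time."""
    after = [(t, te) for t, te in samples if t >= switch_time_s]
    if not after:
        raise ValueError("no samples at or after the switchover")
    peak = max(abs(te) for _, te in after)
    settle = None
    # Walk backwards: the settling point is the start of the final run of
    # samples that all stay within the threshold.
    for t, te in reversed(after):
        if abs(te) > threshold_ns:
            break
        settle = t - switch_time_s
    return SwitchoverReport(peak, settle)

trace = [(0.0, 5.0), (1.0, 5.0), (2.0, 80.0), (3.0, -40.0), (4.0, 8.0), (5.0, 6.0)]
print(analyze_switchover(trace, switch_time_s=1.0, threshold_ns=10.0))
# SwitchoverReport(peak_te_ns=80.0, settle_time_s=3.0)
```

If the trace never returns inside the threshold, `settle_time_s` stays `None`, which flags a switchover that has not converged.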

7:54 The fourth challenge is monitoring. Sometimes we bring the network into an operational state very quickly, but when something goes wrong, it's very difficult to know where the failure is. The failure can be in a node in the fronthaul network, the midhaul network, or the backhaul network, or in the grandmaster itself. There needs to be end-to-end sync monitoring that identifies failures and provides insight into where they are. Monitoring is one of the challenges today, because end-to-end monitoring is not really available across vendors. That's something that is still evolving from a system point of view.

8:50 The last challenge is the multivendor and multi-operator network. First, multivendor: the fronthaul devices may be from vendor X, the midhaul from vendor Y, and the backhaul from some other vendor. Or, within the fronthaul, some cell site routers may come from vendor one and others from vendor two, or even the base station and the DU units may come from different vendors. They all need to work according to the standard protocol definitions, but beyond the standard there are things the standard doesn't talk about, such as how extended failures, switchovers, and transitions have to be handled. That is always going to be a challenge from the operator's point of view. Then there's the multi-operator case, where multiple operators operate in the same RAN network, which brings in a completely different challenge.

9:58 Asymmetry and monitoring can be addressed by a set of specialized features. One is PPM, which is passive port monitoring, and the other is SPM, which is slave port monitoring. Slave port monitoring comes from the 1588 standard, and ITU-T eventually standardized it with a little more granularity. With slave port monitoring, each node synchronizes to its upstream master as it does by default, but it also continues to collect data and export it, either via telemetry or at least by storing the data within the system and providing it to the operator, the end user, in some form, such as CLI show command output.

10:49 The key values computed as part of the slave port monitoring feature are the one-way delay, the two-way delay, the time error offset with respect to the master, and the mean path delay. These are computed continuously and aggregated into 15-minute intervals, so you have 15-minute data covering the entire last 24 hours, plus 24-hour averaged data for another three days or so.
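The retention scheme described for slave port monitoring, 15-minute records for the last 24 hours plus daily records for about three more days, can be sketched as follows. The helper names are hypothetical, the (min, max, mean) summary per window is an assumption, and real implementations store their own set of statistics.

```python
from collections import defaultdict
from statistics import mean

FIFTEEN_MIN_S = 15 * 60
DAY_S = 24 * 3600

def bin_samples(samples, start_s, width_s):
    """Group (time_s, value_ns) samples into windows of width_s starting at start_s.
    Returns {window_start_s: (min, max, mean)} in time order."""
    bins = defaultdict(list)
    for t, v in samples:
        bins[start_s + int((t - start_s) // width_s) * width_s].append(v)
    return {w: (min(vs), max(vs), mean(vs)) for w, vs in sorted(bins.items())}

def spm_history(samples, now_s):
    """15-minute records for the last 24 h, plus daily records for the 3 days before."""
    last_day = [(t, v) for t, v in samples if now_s - DAY_S <= t < now_s]
    older = [(t, v) for t, v in samples if now_s - 4 * DAY_S <= t < now_s - DAY_S]
    return (bin_samples(last_day, now_s - DAY_S, FIFTEEN_MIN_S),
            bin_samples(older, now_s - 4 * DAY_S, DAY_S))

# One offset sample per minute for four days (synthetic data).
samples = [(t, 10.0) for t in range(0, 4 * DAY_S, 60)]
quarter_hours, days = spm_history(samples, now_s=4 * DAY_S)
print(len(quarter_hours), len(days))   # 96 3
```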

11:25 This gives a picture of the entire network, or rather of where each node stood at a given point in time. For example, if at a given point in time this node reports 100 nanoseconds, this node reports 50 nanoseconds, and this node reports 200 nanoseconds, then you know something is going on between these three devices at that instant, and you can correlate the reports to see whether the issue starts at the T-GM or not.
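The correlation step can be sketched as a simple heuristic: line up the reports from each node on the path, taken in the same monitoring interval, and look for the hop where the time error changes the most. The function and node names are hypothetical, and the result only suggests where to look first; it cannot by itself prove which link or node is at fault.

```python
def largest_step(chain: list[tuple[str, float]]) -> tuple[str, str, float]:
    """chain: (node, time_error_ns) pairs ordered from the T-GM downstream,
    all taken from the same monitoring interval.
    Returns (upstream_node, downstream_node, change_ns) for the adjacent pair
    whose reported time error differs the most."""
    if len(chain) < 2:
        raise ValueError("need reports from at least two nodes")
    steps = [(a, b, b_te - a_te) for (a, a_te), (b, b_te) in zip(chain, chain[1:])]
    return max(steps, key=lambda step: abs(step[2]))

# The three reports from the example above: 100 ns, 50 ns, 200 ns.
print(largest_step([("node1", 100.0), ("node2", 50.0), ("node3", 200.0)]))
# ('node2', 'node3', 150.0)
```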

11:53 Passive port monitoring lets you not only monitor the network for phase changes from one path to another, but also find asymmetry in the network as part of the provisioning process. With the passive port monitoring feature, PTP packets are exchanged not only between the slave port and its master, but the downstream node also exchanges packets with the other upstream master over the passive port. It continuously compares the timestamp information received on the passive port against the slave port and reports how far off the synchronization received on the passive port is from that on the slave port. Knowing that, the operator can configure that offset error as asymmetry compensation on that particular port, in this case the passive port. That way, the asymmetry on the secondary path is measured and compensated without going into the field or finding out the fiber length.
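The PPM measurement can be reproduced with the standard two-way PTP calculation. The sketch simulates timestamps for the slave-port path (assumed already measured and compensated) and for the passive-port path (assumed to carry 200 ns of asymmetry), then derives the compensation value. It uses the IEEE 1588 convention delayAsymmetry = (d_ms - d_sm)/2, under which the offset seen on an uncompensated port equals delayAsymmetry; vendor CLIs may use other sign conventions, and all names and numbers here are hypothetical.

```python
def ptp_offset(t1, t2, t3, t4):
    """IEEE 1588 two-way exchange: returns (offset_from_master, mean_path_delay).
    t1: Sync sent (master), t2: Sync received (slave),
    t3: Delay_Req sent (slave), t4: Delay_Req received (master)."""
    offset = ((t2 - t1) - (t4 - t3)) / 2
    mean_path_delay = ((t2 - t1) + (t4 - t3)) / 2
    return offset, mean_path_delay

def simulate_exchange(slave_offset_ns, d_ms_ns, d_sm_ns, t1=0.0, turnaround_ns=1_000.0):
    """Timestamps for one exchange given the slave's true offset and one-way delays."""
    t2 = t1 + d_ms_ns + slave_offset_ns       # slave clock reads true time + offset
    t3 = t2 + turnaround_ns                   # slave sends Delay_Req later
    t4 = (t3 - slave_offset_ns) + d_sm_ns     # master timestamps in true time
    return t1, t2, t3, t4

# Node locked via its slave port; both upstream masters are assumed to trace to
# the same T-GM, so the node's true offset is 0 relative to either of them.
slave_offset, _ = ptp_offset(*simulate_exchange(0.0, 5_000.0, 5_000.0))    # symmetric
passive_offset, _ = ptp_offset(*simulate_exchange(0.0, 5_200.0, 5_000.0))  # 200 ns asymmetry

ppm_offset = passive_offset - slave_offset      # 100.0 ns reported by PPM
delay_asymmetry = ppm_offset                    # value provisioned on the passive port
corrected = passive_offset - delay_asymmetry    # 0.0 ns once compensated
print(ppm_offset, corrected)                    # 100.0 0.0
```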

13:12 That does put a precondition: you still need to measure the very first link, the one connecting the slave port to T-GM1. Once you've measured it, you use the other ports for provisioning. Once provisioning and compensation are done, you continue to monitor. Say the base station is doing the same thing: it has a slave port and a passive port, it synchronizes on the slave port, and it continues to monitor on the passive port.

13:44 Now suppose the base station suddenly reports that the offset between these two ports has gone to, say, 100 nanoseconds or a thousand nanoseconds. Then the operator knows immediately that something is going wrong. Of course, they may not be able to pinpoint whether this link or that link is causing the issue, but at least they know the base station is going through some churn with respect to synchronization.
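The monitoring side reduces to watching the passive-versus-slave offset over time and flagging when it moves well outside its compensated baseline. The threshold and the requirement for several consecutive samples (a debounce against single noisy readings) are assumptions in this hypothetical sketch.

```python
from typing import Optional

def ppm_alarm(offsets_ns: list[float], threshold_ns: float,
              consecutive: int = 3) -> Optional[int]:
    """Index at which |offset| has exceeded threshold_ns for `consecutive`
    samples in a row, or None if that never happens."""
    run = 0
    for i, off in enumerate(offsets_ns):
        run = run + 1 if abs(off) > threshold_ns else 0
        if run >= consecutive:
            return i
    return None

# Compensated baseline of a few ns, then the offset jumps toward 1000 ns.
print(ppm_alarm([5, -8, 6, 120, 950, 1000, 1020], threshold_ns=100))  # 5
print(ppm_alarm([5, 200, 6, 7], threshold_ns=100))                    # None
```

As described in the session, an alarm like this tells the operator that the node is seeing churn, not which of the two links caused it.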